DALL·E 2

DALL·E 2 is an AI system created by OpenAI that is capable of generating images from a natural language description.


Critics of the technology say that, beyond trademark infringement, the system could also threaten the livelihood of artists. DALL·E 2 replicates the style of specific artists, even those who haven't consented to their work being used for the model's training.

The name DALL·E is a portmanteau of Dalí (after the artist Salvador Dalí) and Pixar's WALL-E. OpenAI introduced DALL·E in January 2021. DALL·E 2 was launched on April 6, 2022, with significant improvements over the original. DALL·E took significant time to return images and had issues producing grainy images. DALL·E 2 generates images with greater realism and accuracy, at 4x greater resolution. Evaluators were asked to compare 1,000 image generations from DALL·E 1 and DALL·E 2: 71.7% preferred DALL·E 2 for caption matching, and 88.8% preferred it for photorealism.

DALL·E 2 allows users to create images and art from a text description. Users can also describe the style of the image they want. For example, they can add "in a photorealistic style," "in the style of..." followed by a famous artist, or "as a pencil drawing" to the text description. With each input, the model returns four images based on the text description. The images are 1024x1024 and can be downloaded as a PNG file or shared using a URL.

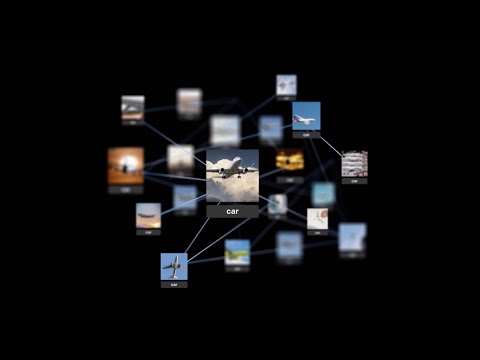

Rather than inputting a natural language description to generate an image, users can prompt DALL·E 2 with an image. This creates either variations of that image or edits based on an accompanying text description. Both variations and edits return three images.

Variations let users feed in an initial generation to get closer to the desired image. Users can also input free-to-use art or self-generated images. Users cannot add a text prompt to guide how DALL·E 2 changes the output.

Edits allow users to provide an image with blank space and a text prompt to complete the image. Edits enable users to introduce new elements to existing images, remove the background from images, or put the subject in a new environment. DALL·E 2 only changes the transparent pixels (the erased section) within the image.
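The contract "only the transparent pixels change" can be illustrated with plain RGBA tuples. In the sketch below, `apply_edit` is a hypothetical helper, not part of OpenAI's API. It assumes the model has already produced a full-size candidate image, and it says nothing about how the model generates the new pixels. It only shows which pixels are allowed to differ from the original.

```python
from typing import List, Tuple

# RGBA pixel; alpha == 0 marks a fully transparent (erased) pixel.
Pixel = Tuple[int, int, int, int]

def apply_edit(original: List[List[Pixel]], generated: List[List[Pixel]]) -> List[List[Pixel]]:
    """Keep every non-transparent pixel of the original; take generated pixels only where alpha == 0."""
    if len(original) != len(generated) or any(len(a) != len(b) for a, b in zip(original, generated)):
        raise ValueError("images must have the same dimensions")
    return [
        [gen if orig[3] == 0 else orig for orig, gen in zip(orig_row, gen_row)]
        for orig_row, gen_row in zip(original, generated)
    ]
```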

Outpainting allows users to expand an existing image beyond its current boundaries to create a larger image. With outpainting, users can adjust the framing of images, fill in sections that were originally cropped out, and experiment with existing art.

In January 2021, OpenAI introduced DALL·E, a neural network that can create images from text captions. DALL·E is a 12-billion-parameter version of the GPT-3 model, trained to generate images from text descriptions using a dataset of text-image pairs. Like GPT-3, DALL·E is a transformer language model. It receives both the text and the image as a single stream of data containing up to 1280 tokens. The model is trained using maximum likelihood to generate all of the tokens, one after another.
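The sketch below makes "maximum likelihood, one token after another" concrete. It builds a single text-then-image token stream and scores it by summing the negative log-probability of each token given its prefix. The split into up to 256 text tokens plus a 32x32 grid of image tokens, and the vocabulary sizes, come from the original DALL·E paper rather than the text above. `log_prob` is a hypothetical stand-in for the transformer, padding of short captions is ignored, and this is an illustration rather than OpenAI's training code.

```python
import math
from typing import Callable, List, Sequence

TEXT_VOCAB = 16384          # BPE text vocabulary size reported for the original DALL·E
IMAGE_VOCAB = 8192          # vocabulary of discrete image codes
MAX_TEXT_TOKENS = 256
IMAGE_TOKENS = 32 * 32      # one token per cell of a 32x32 grid
MAX_STREAM = MAX_TEXT_TOKENS + IMAGE_TOKENS   # 1280 tokens in a single stream

def build_stream(text_tokens: Sequence[int], image_tokens: Sequence[int]) -> List[int]:
    """Concatenate text and image tokens; image ids are offset so the two vocabularies don't collide."""
    if len(text_tokens) > MAX_TEXT_TOKENS:
        raise ValueError("too many text tokens")
    if len(image_tokens) != IMAGE_TOKENS:
        raise ValueError("expected exactly 32x32 image tokens")
    return list(text_tokens) + [TEXT_VOCAB + t for t in image_tokens]

def negative_log_likelihood(stream: Sequence[int],
                            log_prob: Callable[[Sequence[int], int], float]) -> float:
    """Sum over positions of -log p(token_t | tokens_<t); maximum-likelihood training minimizes this."""
    return -sum(log_prob(stream[:t], stream[t]) for t in range(len(stream)))
```

With a uniform stand-in model, every token costs `log(TEXT_VOCAB + IMAGE_VOCAB)` nats. A trained model lowers the total by predicting each image token from the caption and the image tokens before it.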

DALL·E 2, the second iteration of the tool, was launched on April 6, 2022. To learn about the technology, including how it is used and its capabilities and limitations, OpenAI initially limited DALL·E 2 access to trusted users. The phased deployment was intended to give OpenAI time to develop safeguards that prevent harmful image generation and curb misuse of the tool. During DALL·E 2's initial use, OpenAI asked users not to share photorealistic generations that include faces and to flag any problematic generations, to limit potential harm.

On April 13, 2022, a team of OpenAI researchers (Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen) published a paper titled "Hierarchical Text-Conditional Image Generation with CLIP Latents." The paper describes the two-stage model used for image generation. The first stage is a prior that generates a CLIP image embedding from a given text caption. CLIP (Contrastive Language-Image Pre-training) is a neural network developed by OpenAI that can learn visual concepts from natural language descriptions. The second stage is a decoder, based on diffusion models, that generates an image conditioned on the image embedding. The paper shows that the new model improves image diversity with minimal loss in photorealism and caption similarity.

On May 18, 2022, OpenAI published a research preview update on the early use of the DALL·E 2 beta. The update found the following:

- Users had collectively created over 3 million images.

- Less than 0.05% of downloaded or publicly shared images were flagged as potentially violating OpenAI's content policy.

- Upon human review, roughly 30% of flagged images were confirmed to violate policy, leading to account deactivation.

On July 20, 2022, OpenAI announced that DALL·E 2 was available in beta. It planned to invite a million users from the waiting list to the platform over the coming weeks. Every user was to receive 50 free credits during their first month, with 15 free credits added every subsequent month. Each credit allows for one original DALL·E 2 prompt generation (returning four images) or one edit/variation prompt (returning three images). During the first phase of the beta, users could buy additional credits in increments of 115 credits for $15. From the release of the beta, users received full usage rights to commercialize the images they create with DALL·E 2, including reprinting, selling, and merchandising. These rights also apply to images generated earlier during the research preview.
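The credit figures above translate into image counts and prices with simple arithmetic. This sketch assumes every credit in a month is spent on a single kind of prompt. The text above does not say whether unused free credits carry over, so each month is treated separately. Exact fractions avoid rounding in the per-credit and per-image prices.

```python
from fractions import Fraction

FIRST_MONTH_FREE_CREDITS = 50
LATER_MONTH_FREE_CREDITS = 15
IMAGES_PER_GENERATION = 4          # one original prompt returns four images
IMAGES_PER_EDIT_OR_VARIATION = 3   # one edit/variation prompt returns three images
PACK_CREDITS, PACK_PRICE_USD = 115, 15

def monthly_free_credits(month: int) -> int:
    """Free credits granted in a given month of use (month 1 is the first month)."""
    if month < 1:
        raise ValueError("month starts at 1")
    return FIRST_MONTH_FREE_CREDITS if month == 1 else LATER_MONTH_FREE_CREDITS

def max_images(credits: int, edits: bool = False) -> int:
    """Upper bound on images if every credit goes to one kind of prompt."""
    per_credit = IMAGES_PER_EDIT_OR_VARIATION if edits else IMAGES_PER_GENERATION
    return credits * per_credit

price_per_credit = Fraction(PACK_PRICE_USD, PACK_CREDITS)            # $3/23, about $0.13
price_per_generated_image = price_per_credit / IMAGES_PER_GENERATION  # $3/92, about $0.033
```

For example, a first month's 50 free credits cover at most 200 images from original prompts, or 150 from edits and variations.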

On August 31, 2022, OpenAI announced the new outpainting feature. It allows users to extend existing images beyond their current borders, using a text description to add new visual elements in the same style or to take the image in a new direction.


The image generations endpoint allows users to create an original image from a text prompt. Images can be 256x256, 512x512, or 1024x1024 pixels, and smaller sizes are faster to generate. Users can request 1 to 10 images at a time. The response_format parameter selects whether images are returned as a URL (which expires after an hour) or as Base64 data.
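As a minimal sketch, a request to the endpoint can be built with the standard library alone, validating the constraints described above before anything is sent. The URL, the `prompt`/`n`/`size`/`response_format` field names, and the `"url"`/`"b64_json"` values follow OpenAI's public API reference as we understand it. Check them against the current documentation before relying on this. The function only builds the request and does not send it.

```python
import json
import urllib.request

# Endpoint and field names as given in OpenAI's public API reference (verify before use).
GENERATIONS_URL = "https://api.openai.com/v1/images/generations"
ALLOWED_SIZES = {"256x256", "512x512", "1024x1024"}
ALLOWED_FORMATS = {"url", "b64_json"}  # URL (expires after an hour) or Base64 data

def build_generation_request(prompt: str, api_key: str, n: int = 1,
                             size: str = "1024x1024",
                             response_format: str = "url") -> urllib.request.Request:
    """Build (but do not send) a POST request for the image generations endpoint."""
    if not 1 <= n <= 10:
        raise ValueError("n must be between 1 and 10")
    if size not in ALLOWED_SIZES:
        raise ValueError(f"unsupported size: {size}")
    if response_format not in ALLOWED_FORMATS:
        raise ValueError(f"unsupported response_format: {response_format}")
    body = json.dumps({"prompt": prompt, "n": n, "size": size,
                       "response_format": response_format}).encode("utf-8")
    return urllib.request.Request(
        GENERATIONS_URL,
        data=body,
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen(req)` returns JSON whose `data` list holds one entry per image, carrying either a `url` or a `b64_json` field depending on `response_format`.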

OpenAI worked with early customers to build apps and products using DALL·E 2, including the following:

- Microsoft, an OpenAI investor, added DALL·E to a new graphic design app called Designer, which creates social media posts, invitations, digital postcards, graphics, and more. Microsoft also integrated it into Bing and Microsoft Edge with Image Creator, allowing users to generate their own images if they struggle to find what they are looking for on the web.

- CALA, a fashion and lifestyle operating system whose AI tools, powered by DALL·E, generate design ideas from text descriptions or uploaded reference images.

- Mixtiles, a photo start-up using the DALL·E API to create artwork, guiding customers through a process that captures childhood memories, dream holidays, and more.

Other use cases include the following:

- Stitch Fix, an online personal styling service, experimenting with DALL·E 2 to visualize products that a stylist can match with similar products.

- Heinz used DALL·E 2 for a "Draw Ketchup" marketing campaign, asking fans to send their own prompts to generate images of ketchup bottles.

- Open-source project OctoSQL used DALL·E 2 to create its logo.

- Deephaven tested using DALL·E 2 to generate thumbnails for the company's blog.

One of the main use cases for DALL·E 2 is art. During the research preview, when access to DALL·E 2 was limited, OpenAI invited more than 3,000 artists from more than 118 countries to incorporate the model into their creative workflows. Creatives now using DALL·E include AR designers, landscape architects, tattoo artists, clothing designers, directors, sound designers, and dancers, among many others. When announcing the DALL·E beta, OpenAI also announced subsidized access for qualifying artists, who can apply by filling out an interest form.

OpenAI maintains a content policy that users must adhere to when using its generative AI tools. This includes not attempting to create, upload, or share images that are "not G-rated" or that may cause harm. Specific areas covered by the content policy include the following:

- Hate—hateful symbols, negative stereotypes, comparing certain groups to animals/objects, or otherwise expressing or promoting hate based on identity.

- Harassment—mocking, threatening, or bullying an individual.

- Violence—violent acts and the suffering or humiliation of others.

- Self-harm—suicide, cutting, eating disorders, and other attempts at harming oneself.

- Sexual—nudity, sexual acts, sexual services, or content otherwise meant to arouse sexual excitement.

- Shocking—bodily fluids, obscene gestures, or other profane subjects that may shock or disgust.

- Illegal activity—drug use, theft, vandalism, and other illegal activities.

- Deception—major conspiracies or events related to major ongoing geopolitical events.

- Political—politicians, ballot boxes, protests, or other content that may be used to influence the political process or to campaign.

- Public and personal health—the treatment, prevention, diagnosis, or transmission of diseases, or people experiencing health ailments.

- Spam—unsolicited bulk content.


OpenAI uses a mix of automated and human monitoring systems to enforce its content policy. The company employs prompt- and image-level filters with DALL·E 2 and has focused research efforts on diversifying the images produced by the model.


Shutterstock announced it would start using DALL·E 2 to generate content. At the same time, it launched a contributor fund to compensate creators when the company sells their work to train generative AI systems. As of November 2022, OpenAI had not stated whether it would participate in Source+, an initiative that aims to let people prevent their work or likeness from being used for AI training purposes.

DALL·E 2 works by first training a diffusion decoder to invert a CLIP image encoder. The inverter is non-deterministic, producing multiple images for a given image embedding. Pairing an encoder with a decoder also enables applications beyond text-to-image translation. As with Generative Adversarial Network (GAN) inversion, encoding and then decoding an input image produces semantically similar output images. The model can also interpolate between input images by inverting interpolations of their image embeddings. A notable advantage of using CLIP's latent space is the ability to modify images by moving in the direction of any encoded text vector.

The DALL·E 2 model allows any image to be encoded into a bipartite latent representation (zi, xT) that is sufficient for the decoder to produce an accurate reconstruction. zi describes the aspects of the image that are recognized by CLIP, while xT encodes all of the residual information the decoder needs to reconstruct the image x. zi is obtained by encoding the image with the CLIP image encoder. xT is obtained by applying denoising diffusion implicit model (DDIM) inversion to x using the decoder, while conditioning on zi. Using these bipartite representations, the model can produce different kinds of manipulations, including:

- Variations—producing related images that share the same defining content but vary in other aspects, such as shape and orientation. This is done by applying the decoder to the bipartite representation using DDIM with η > 0, which introduces stochasticity into the reconstruction of the original image.

- Interpolations—blending two images to produce variations that traverse the concepts occurring between them in CLIP's embedding space.

- Text Diffs—language-guided image manipulations, modifying an image based on a new text description.
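The paper describes both interpolations and text diffs as spherical interpolation (slerp) between unit-normalized CLIP embeddings. Interpolations rotate between two image embeddings. Text diffs rotate an image embedding toward the normalized difference between a new caption's text embedding and the original caption's. The sketch below shows only that vector arithmetic on plain lists, assuming the embeddings are already available. The CLIP encoders and the decoder that turns the result back into an image are not included.

```python
import math
from typing import List

Vector = List[float]

def normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def slerp(a: Vector, b: Vector, theta: float) -> Vector:
    """Spherical interpolation between the directions of a and b; theta=0 gives a, theta=1 gives b."""
    a, b = normalize(a), normalize(b)
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    omega = math.acos(dot)            # angle between the two unit vectors
    if omega < 1e-8:                  # (almost) the same direction: nothing to rotate
        return a
    s = math.sin(omega)
    wa = math.sin((1.0 - theta) * omega) / s
    wb = math.sin(theta * omega) / s
    return [wa * x + wb * y for x, y in zip(a, b)]

def text_diff_direction(z_t_new: Vector, z_t_old: Vector) -> Vector:
    """Normalized difference between the new and original caption embeddings."""
    return normalize([n - o for n, o in zip(z_t_new, z_t_old)])
```

An interpolation decodes `slerp(z_i_a, z_i_b, theta)` for several values of `theta`. A text diff decodes `slerp(z_i, text_diff_direction(z_t_new, z_t_old), theta)`, where larger `theta` moves the image further toward the new description.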

In simplified form, DALL·E 2 generates images from text captions as follows:

- The text encoder maps the prompt into a representation space.

- The prior maps this representation to a corresponding image encoding to capture the semantic information of the text input.

- The decoder stochastically generates an image that visually manifests the semantic information.
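The three steps above can be sketched as a data flow with pluggable components. Everything here is hypothetical. `toy_text_encoder`, `toy_prior`, and `toy_decoder` are stand-ins that only make the sketch runnable, and the "image" is just a short vector. The point is the shape of the pipeline: the prompt is encoded once, and a new image embedding and a new image are then sampled for each output, which is why one prompt yields several different images.

```python
import random
from typing import Callable, List

Vector = List[float]

def generate(prompt: str,
             text_encoder: Callable[[str], Vector],
             prior: Callable[[Vector, random.Random], Vector],
             decoder: Callable[[Vector, random.Random], Vector],
             num_images: int = 4,
             seed: int = 0) -> List[Vector]:
    """Prompt -> text embedding -> sampled image embedding -> sampled image."""
    rng = random.Random(seed)
    z_t = text_encoder(prompt)              # 1. map the prompt into the representation space
    images = []
    for _ in range(num_images):
        z_i = prior(z_t, rng)               # 2. sample an image embedding for this caption
        images.append(decoder(z_i, rng))    # 3. stochastically decode it into an "image"
    return images

# Hypothetical toy stand-ins, only so the sketch runs end to end.
def toy_text_encoder(prompt: str) -> Vector:
    return [float(prompt.count(ch)) for ch in "aeiou"]

def toy_prior(z_t: Vector, rng: random.Random) -> Vector:
    return [x + rng.gauss(0.0, 0.1) for x in z_t]

def toy_decoder(z_i: Vector, rng: random.Random) -> Vector:
    return [x + rng.gauss(0.0, 0.1) for x in z_i]
```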

The link between text and visuals is produced using CLIP, which is trained on hundreds of millions of images and associated captions to learn how strongly a given piece of text relates to different images. CLIP does not predict a caption for a given image; instead, it quantifies the relationship between any given caption and an image. CLIP is what ultimately determines how semantically related a text caption is to a visual concept.
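CLIP quantifies a caption-image relationship by embedding both into a shared space and comparing the embeddings with cosine similarity. The sketch below shows only that comparison step: ranking candidate captions for one image. The embedding vectors are hypothetical placeholders, since the real CLIP encoders are not included. CLIP's learned temperature scaling is also omitted because it does not change the ranking.

```python
import math
from typing import Dict, List, Sequence

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def rank_captions(image_embedding: Sequence[float],
                  caption_embeddings: Dict[str, Sequence[float]]) -> List[str]:
    """Order candidate captions by similarity to one image embedding, best match first."""
    return sorted(caption_embeddings,
                  key=lambda c: cosine_similarity(image_embedding, caption_embeddings[c]),
                  reverse=True)
```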


OpenAI also encourages users to disclose when AI is used in their work. Users may remove the DALL·E signature (watermark logo), but they should not mislead others about how the work was created. For example, they should not tell people the work was entirely human-generated or that it is a photo of a real event. The content policy also refers to the rights of others, asking users not to:

- upload images of people without their consent,

- upload images to which you do not hold appropriate usage rights, or

- create images of public figures.


On June 28, 2022, OpenAI released information on pre-training mitigations, a subset of the guardrails in place to prevent images from violating its content policy. Specifically, these are the guardrails that directly modify the data DALL·E 2 learns from, removing and reweighting captioned images from the hundreds of millions the model is trained on. The post was organized into three sections:

- Reducing graphic and explicit training data

- Fixing bias introduced by data filters

- Preventing image regurgitation

OpenAI has been criticized for releasing its generative AI tools without sufficient consideration of the ethical and legal issues they raise. In September 2022, Getty Images banned the upload and sale of images generated using DALL·E 2 and other generative AI tools. Getty CEO Craig Peters stated:

There are real concerns with respect to the copyright of outputs from these models and unaddressed rights issues with respect to the imagery, the image metadata and those individuals contained within the imagery... We are being proactive to the benefit of our customers.


June 28, 2022

Specifically, the pre-training mitigations refer to guardrails that directly modify the data DALL·E 2 learns from, removing and reweighting captioned images from the hundreds of millions the model is trained on.

The DALL·E 2 model is detailed in a paper published by OpenAI researchers titled "Hierarchical Text-Conditional Image Generation with CLIP Latents." The paper describes how DALL·E 2 combines two components for text-conditional image generation: diffusion models and OpenAI's neural network CLIP, which can learn visual concepts from natural language supervision. CLIP is described in detail in the February 2021 paper "Learning Transferable Visual Models From Natural Language Supervision," by Radford et al.


The decoder can invert CLIP image embeddings (zi) into images (x), but generating images from text captions also requires a prior model that produces the CLIP image embedding. The DALL·E 2 developers experimented with two types of models for the prior:

- Autoregressive (AR) prior—CLIP image embedding is converted into a sequence of discrete codes and predicted autoregressively conditioned on the text caption.

- Diffusion prior—Continuous vector (zi) is directly modeled using a Gaussian diffusion model conditioned on the text caption.

The paper showed that both models yielded comparable performance. Because the diffusion prior is computationally more efficient, it was selected for DALL·E 2. The diffusion prior is a decoder-only Transformer with a causal attention mask, trained on a sequence that consists of:

- the encoded text

- the CLIP text embedding

- an embedding for the diffusion timestep

- the noised CLIP image embedding

- a final embedding whose output from the Transformer is used to predict the unnoised CLIP image embedding
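The input layout listed above, together with the causal attention mask, can be sketched symbolically. The position labels are descriptive placeholders rather than names from the paper's code, and the encoded text is shown as several tokens. The mask lets each position attend only to itself and earlier positions. As a result, the final position, whose output predicts the unnoised CLIP image embedding, can see the text, the CLIP text embedding, the timestep, and the noised image embedding, while no earlier position can see it.

```python
from typing import List

def prior_sequence(num_text_tokens: int) -> List[str]:
    """Symbolic layout of the diffusion prior's input sequence (labels are illustrative)."""
    return ([f"text_token_{k}" for k in range(num_text_tokens)]
            + ["clip_text_embedding",
               "timestep_embedding",
               "noised_clip_image_embedding",
               "final_query"])        # output here predicts the unnoised CLIP image embedding

def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j, i.e. when j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]
```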

Sample quality is improved at sampling time by generating two samples of zi and selecting the one with the higher dot product with zt. Rather than predicting the added noise, the model is trained to predict the unnoised zi directly, using a mean-squared error loss.
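Both ideas fit in a few lines. In this sketch, `sample_prior` is a hypothetical callable standing in for one draw from the diffusion prior. Selection keeps whichever of the two candidate zi has the larger dot product with zt, and the training loss is the mean-squared error between the predicted and true unnoised zi. It is a sketch of the selection and loss only, not of the diffusion sampler itself.

```python
from typing import Callable, List, Sequence

def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean-squared error between a predicted and the true (unnoised) z_i."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)

def sample_best_of_two(z_t: Sequence[float],
                       sample_prior: Callable[[Sequence[float]], List[float]]) -> List[float]:
    """Draw two candidate z_i from the prior and keep the one with the higher dot product with z_t."""
    candidates = [sample_prior(z_t), sample_prior(z_t)]
    return max(candidates, key=lambda z_i: dot(z_i, z_t))
```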


February 26, 2021

CLIP is a neural network developed by OpenAI that can learn visual concepts from natural language descriptions.


The model's training dataset consists of pairs of images (x) and their corresponding captions (y). Given an image x with CLIP image embedding zi and CLIP text embedding zt, the model's generative stack produces images from captions using two components:

- Prior P(zi|y), which generates CLIP image embeddings zi conditioned on captions y.

- Decoder P(x|zi,y), which produces images x conditioned on CLIP image embeddings zi and text captions y (the use of text captions is optional).

The decoder inverts images given their CLIP image embedding, while the prior learns a generative model of the image embedding itself. Stacking the two components produces a generative model P(x|y) of images x given captions y:

$$P(x \mid y) = P(x, z_i \mid y) = P(x \mid z_i, y)\,P(z_i \mid y)$$

The first equality holds because zi is a deterministic function of x, and the second holds because of the chain rule. Therefore, it is possible to sample from the true conditional distribution P(x|y) by first sampling zi using the prior and then sampling x using the decoder.
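A toy discrete example makes the factorization checkable. The distributions below are hypothetical. "Images" are short phrases, and zi is the colour word, which is a deterministic function of x as the argument requires. The exact P(x|y) is the product of the decoder and prior probabilities. Sampling zi from the prior and then x from the decoder reproduces those probabilities empirically.

```python
import random
from collections import Counter
from typing import Dict

# Hypothetical toy distributions for a single caption y.
PRIOR: Dict[str, float] = {"red": 0.7, "blue": 0.3}          # P(z_i | y)
DECODER: Dict[str, Dict[str, float]] = {                     # P(x | z_i, y)
    "red": {"red square": 0.5, "red circle": 0.5},
    "blue": {"blue square": 1.0},
}

def exact_conditional() -> Dict[str, float]:
    """P(x | y) = P(x | z_i, y) * P(z_i | y), where z_i is fixed by x."""
    return {x: p_x * PRIOR[z] for z, dist in DECODER.items() for x, p_x in dist.items()}

def two_stage_sample(rng: random.Random) -> str:
    z = rng.choices(list(PRIOR), weights=list(PRIOR.values()))[0]    # sample z_i from the prior
    dist = DECODER[z]
    return rng.choices(list(dist), weights=list(dist.values()))[0]    # then x from the decoder

def empirical_conditional(num_samples: int, seed: int = 0) -> Dict[str, float]:
    rng = random.Random(seed)
    counts = Counter(two_stage_sample(rng) for _ in range(num_samples))
    return {x: c / num_samples for x, c in counts.items()}
```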

DALL·E 2's decoder uses diffusion models as defined in "Denoising Diffusion Probabilistic Models" by Ho et al. and "Improved Techniques for Training Score-Based Generative Models" by Yang Song and Stefano Ermon. Specifically, the decoder modifies the GLIDE (Guided Language-to-Image Diffusion for Generation and Editing) architecture, which was also developed by OpenAI and is described in "GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models" by Nichol et al. DALL·E 2 makes two changes to GLIDE. It projects the CLIP embedding and adds it to the existing timestep embedding. It also projects the CLIP embedding into four extra tokens of context that are concatenated to the sequence of outputs from the GLIDE text encoder.
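The two conditioning changes can be sketched with plain matrix-vector products. The projection weights here are hypothetical inputs, since a real model learns them. The sketch also assumes the four extra tokens are appended after the text encoder's outputs. The description above only says they are concatenated, so the ordering is an assumption. This illustrates the data flow, not GLIDE's or DALL·E 2's implementation.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]

def matvec(w: Matrix, v: Vector) -> Vector:
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]

def condition_decoder(timestep_emb: Vector, clip_emb: Vector,
                      w_time: Matrix, w_tokens: List[Matrix],
                      text_encoder_outputs: List[Vector]) -> Tuple[Vector, List[Vector]]:
    """Return (conditioned timestep embedding, extended context sequence).

    - The CLIP embedding is projected and added to the timestep embedding.
    - It is also projected into len(w_tokens) extra context tokens (four in DALL·E 2),
      which are appended to the text encoder's output sequence (ordering assumed).
    """
    t = [a + b for a, b in zip(timestep_emb, matvec(w_time, clip_emb))]
    extra_tokens = [matvec(w, clip_emb) for w in w_tokens]
    return t, text_encoder_outputs + extra_tokens
```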

Previous work on diffusion models has shown that guidance on the conditioning information improves sample quality compared with sampling directly from the decoder's conditional distribution. Classifier-free guidance is enabled during training by randomly setting the CLIP embeddings to zero 10% of the time and randomly dropping the text caption 50% of the time. High-resolution images are produced by two diffusion upsampler models: one upsamples 64x64 images to 256x256, and a second further upsamples them to 1024x1024. The robustness of these upsamplers is improved by slightly corrupting the conditioning images during training, using Gaussian blur for the first upsampling stage and a more diverse degradation for the second.
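The sketch below shows both halves of classifier-free guidance. During training, conditioning is randomly dropped at the rates given above, so one network learns both conditional and unconditional predictions. Treating the two drops as independent is an assumption. At sampling time, the standard classifier-free guidance formula (from Ho and Salimans, not stated in the text above) pushes the prediction beyond the conditional one by a guidance scale. The scale is a free parameter, and the predictions here are plain lists rather than network outputs.

```python
import random
from typing import List, Optional, Sequence, Tuple

def drop_conditioning(clip_emb: Sequence[float], caption: Optional[str], rng: random.Random,
                      p_zero_clip: float = 0.10,
                      p_drop_caption: float = 0.50) -> Tuple[List[float], Optional[str]]:
    """Training-time dropout: zero the CLIP embedding 10% of the time, drop the caption 50% of the time."""
    if rng.random() < p_zero_clip:
        clip_emb = [0.0] * len(clip_emb)
    if rng.random() < p_drop_caption:
        caption = None
    return list(clip_emb), caption

def guided_prediction(eps_uncond: Sequence[float], eps_cond: Sequence[float],
                      scale: float) -> List[float]:
    """Classifier-free guidance: uncond + scale * (cond - uncond); scale=1 recovers the conditional."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]
```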

DALL·E 2 combines concepts, attributes, and styles to create original images and art from a text description, make edits to existing images, create variations of existing images, and expand existing images beyond the original canvas. The model uses a process called "diffusion" to learn the relationship between images and the text describing them. What starts as a pattern of random dots gradually refines toward an image based on the description.
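The "random dots" are the end point of the forward diffusion process. During training, an image is progressively mixed with Gaussian noise according to a schedule, and the model learns to reverse that corruption step by step. The sketch below shows only the forward (noising) side, using the linear schedule from Ho et al. purely for illustration. DALL·E 2's actual schedule is not given in this article, and the learned denoising network is not included.

```python
import math
import random
from typing import List

def noise_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Cumulative products alpha_bar_t for a linear beta schedule (DDPM-style)."""
    if num_steps < 2:
        raise ValueError("need at least two steps")
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars

def add_noise(x0: List[float], alpha_bar: float, rng: random.Random) -> List[float]:
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(alpha_bar) * x_0, (1 - alpha_bar) * I)."""
    return [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0) for x in x0]
```

Early steps keep `alpha_bar` close to 1, so the image is barely changed. By the last step almost none of the original signal remains, which is the random-dot pattern that generation starts from.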

Access was initially limited to trusted users and those on the waiting list, but the DALL·E 2 beta became available to anyone online on September 28, 2022. Users can sign up to use DALL·E 2 on the OpenAI website. DALL·E 2 is accessed in the browser, where images are created by typing a descriptive prompt of up to 400 characters. DALL·E 2 offers users a limited number of monthly credits with the option to pay for more. The DALL·E 2 API public beta became available on November 3, 2022, allowing developers to integrate the model into their own apps or products.

DALL·E 2 allows users to create images and art from a text description. As well as describing the desired image, users can describe the style of the image they want. For example, adding "in a photorealistic style," "in the style of..." for a famous artist, or "as a pencil drawing" to the text description. With each input, the model returns four images based on the text description. The images are 1024x1024 and can be downloaded as a PNG file or shared using a URL.

Rather than inputting a natural language description to generate an image, users can prompt DALL·E 2 with an image to create either variations of that image or edits based on an accompanying text description. Both variations and edits return three images. Variations let users feed an initial generation back in to get closer to the desired image, or input free-to-use art or self-generated images. Users cannot add a text prompt to guide how DALL·E 2 changes the output.

Edits let users provide an image with blank space and a text prompt describing how to complete it. With edits, users can introduce new elements to existing images, remove the background from images, or put the subject in a new environment. DALL·E 2 only changes the transparent pixels (the erased section) of the image.
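The rule that only transparent pixels change can be pictured as a per-pixel composite. The sketch below illustrates that described behavior and is not OpenAI's implementation: it assumes images are small 2D grids of RGBA tuples, treats alpha 0 as the erased area, and uses a hypothetical `generated` image in place of real model output.

```python
from typing import List, Tuple

RGBA = Tuple[int, int, int, int]
Image = List[List[RGBA]]


def composite_edit(original: Image, generated: Image) -> Image:
    """Keep every non-transparent pixel of the original; take the generated
    pixel only where the original's alpha is 0 (the erased section)."""
    if len(original) != len(generated) or any(
        len(a) != len(b) for a, b in zip(original, generated)
    ):
        raise ValueError("original and generated images must have the same dimensions")
    return [
        [gen if orig[3] == 0 else orig for orig, gen in zip(row_o, row_g)]
        for row_o, row_g in zip(original, generated)
    ]


RED = (255, 0, 0, 255)
ERASED = (0, 0, 0, 0)
BLUE = (0, 0, 255, 255)

original = [[RED, ERASED], [RED, RED]]
generated = [[BLUE, BLUE], [BLUE, BLUE]]  # stand-in for model output
print(composite_edit(original, generated))
# [[(255, 0, 0, 255), (0, 0, 255, 255)], [(255, 0, 0, 255), (255, 0, 0, 255)]]
```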

Outpainting allows users to expand an existing image beyond its current boundaries to create a larger image. With outpainting it is possible to adjust the framing of images, fill in sections that were originally cropped out, or to experiment with existing art.

Example of DALL·E 2's outpainting feature using the Mona Lisa.
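One way to picture outpainting is as an edit on a larger canvas: the original image sits inside a bigger frame whose new border starts out fully transparent, so the border is the only region left for the model to fill. This is an illustrative sketch under that assumption, not OpenAI's method; images are toy 2D grids of RGBA tuples and the function names are hypothetical.

```python
from typing import List, Tuple

RGBA = Tuple[int, int, int, int]
Image = List[List[RGBA]]
TRANSPARENT: RGBA = (0, 0, 0, 0)


def expand_canvas(image: Image, left: int, right: int, top: int, bottom: int) -> Image:
    """Place `image` on a larger canvas whose new border is fully transparent."""
    if min(left, right, top, bottom) < 0:
        raise ValueError("padding must be non-negative")
    width = len(image[0]) if image else 0
    new_width = left + width + right
    body = [[TRANSPARENT] * left + list(row) + [TRANSPARENT] * right for row in image]
    top_rows = [[TRANSPARENT] * new_width for _ in range(top)]
    bottom_rows = [[TRANSPARENT] * new_width for _ in range(bottom)]
    return top_rows + body + bottom_rows


def transparent_fraction(image: Image) -> float:
    """Share of pixels that would be left for the model to fill."""
    pixels = [p for row in image for p in row]
    if not pixels:
        return 0.0
    return sum(1 for p in pixels if p[3] == 0) / len(pixels)


art = [[(200, 180, 120, 255)] * 2 for _ in range(2)]  # 2x2 opaque "painting"
wider = expand_canvas(art, left=1, right=1, top=1, bottom=1)
print(len(wider), len(wider[0]))    # 4 4
print(transparent_fraction(wider))  # 0.75
```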

All prompts and images are filtered based on OpenAI's content policy, returning an error if a prompt or image is flagged.

The price of the DALL·E 2 API is set per image and depends on resolution: $0.020 per 1024x1024 image, $0.018 per 512x512 image, and $0.016 per 256x256 image. DALL·E 2 adds a watermark (colored squares described as DALL·E's "signature") to the bottom-right corner of the images it creates, but the watermark is optional when using the API. OpenAI first introduced watermarking during the DALL·E 2 beta to indicate whether an image originated from the model.
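Since API pricing is per image and depends only on resolution, the cost of a batch is a price lookup times a count. A short sketch using the per-image prices listed above; `Decimal` avoids floating-point rounding on sub-cent amounts, and the function name is illustrative.

```python
from decimal import Decimal

# Per-image DALL·E 2 API prices by resolution, as listed in the text (USD).
PRICE_PER_IMAGE = {
    "1024x1024": Decimal("0.020"),
    "512x512": Decimal("0.018"),
    "256x256": Decimal("0.016"),
}


def batch_cost(size: str, n_images: int) -> Decimal:
    """Cost in USD of generating n_images at the given resolution."""
    if size not in PRICE_PER_IMAGE:
        raise ValueError(f"unsupported size {size!r}; expected one of {sorted(PRICE_PER_IMAGE)}")
    if n_images < 0:
        raise ValueError("n_images must be non-negative")
    return PRICE_PER_IMAGE[size] * n_images


print(batch_cost("1024x1024", 10))  # 0.200
print(batch_cost("256x256", 500))   # 8.000
```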

OpenAI worked with some early customers to build apps and products using DALL·E 2, including:

- Microsoft, an OpenAI investor, adding DALL·E to Designer, a new graphic design app that creates social media posts, invitations, digital postcards, graphics, and more, and integrating it into Bing and Microsoft Edge through Image Creator, which lets users generate their own images when they cannot find what they are looking for on the web.

- CALA, a fashion and lifestyle operating system, offering AI tools powered by DALL·E that generate design ideas from text descriptions or uploaded reference images.

- Mixtiles, a photo startup, using the DALL·E API to create artwork guided by customers through a process that captures childhood memories, dream holidays, and more.

Other use cases include:

- Stitch Fix, an online personal styling service, experimenting with DALL·E 2 to visualize products that a stylist can match with similar products.

- Heinz used DALL·E 2 for a "Draw Ketchup" marketing campaign, asking fans to send their own prompts to generate images of ketchup bottles.

- Open-source project OctoSQL used DALL·E 2 to create its logo.

- Deephaven tested using DALL·E 2 to generate thumbnails for the company's blog.

One of the main use cases for DALL·E 2 is art. During the research preview, when access to DALL·E 2 was limited, OpenAI invited more than 3,000 artists from more than 118 countries to incorporate the model into their creative workflows. Creatives now using DALL·E include AR designers, landscape architects, tattoo artists, clothing designers, directors, sound designers, dancers, and more. When announcing the DALL·E beta, OpenAI also offered subsidized access to qualifying artists, who could apply by filling out an interest form.


Comparison of the outputs for DALL·E 1 and 2 given the same description.

DALL·E 2 became available to anyone online on September 28, 2022. Users can sign up to use DALL·E 2 on the OpenAI website. The model runs in the browser, and images are created by typing a descriptive prompt of up to 400 characters. Originally free to use (until July 2022), DALL·E 2 now offers users a limited number of monthly credits with the option to pay for more.

On April 13, 2022, a team of researchers from OpenAI: Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen published a paper titled "Hierarchical Text-Conditional Image Generation with CLIP Latents." The paper describes the two-stage model leveraged for image generation. The first stage consists of a prior that generates a CLIP image embedding from a given text caption. CLIP stands for Contrastive Language-Image Pre-training, a neural network developed by OpenAI which can learn visual concepts from natural language descriptions. The second stage is a decoder, using diffusion models, that generates an image conditioned on the image embedding. The paper shows the new image generation model improves image diversity with minimal loss in photorealism and caption similarity.
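The data flow of the two-stage design can be traced with stub functions. Everything here is a placeholder: the stubs return dummy values instead of running CLIP or any diffusion model, `EMBED_DIM = 8` is an arbitrary toy width, and all function names are hypothetical. The 64x64 decoder output followed by 256x256 and 1024x1024 upsamplers follows the upsampler description at the start of this section.

```python
from dataclasses import dataclass
from typing import List

EMBED_DIM = 8  # toy width for this sketch only


@dataclass
class Image:
    size: int  # square image of size x size pixels; no pixel data in this sketch


def clip_text_embedding(caption: str) -> List[float]:
    """Stub for CLIP's text encoder (placeholder arithmetic, not a real model)."""
    return [float(ord(ch) % 7) for ch in caption[:EMBED_DIM].ljust(EMBED_DIM)]


def prior(text_embedding: List[float]) -> List[float]:
    """Stage 1 stub: map a CLIP text embedding to a CLIP image embedding."""
    return [0.5 * x for x in text_embedding]


def decoder(image_embedding: List[float]) -> Image:
    """Stage 2 stub: a diffusion decoder conditioned on the image embedding,
    producing a 64x64 image."""
    if len(image_embedding) != EMBED_DIM:
        raise ValueError("unexpected embedding size")
    return Image(size=64)


def upsampler(image: Image, target: int) -> Image:
    """Stub for one diffusion upsampler (64->256 or 256->1024)."""
    if target <= image.size:
        raise ValueError("upsampler must increase resolution")
    return Image(size=target)


def generate(caption: str) -> List[int]:
    """Run caption -> prior -> decoder -> two upsamplers, recording each resolution."""
    image = decoder(prior(clip_text_embedding(caption)))
    sizes = [image.size]
    for target in (256, 1024):
        image = upsampler(image, target)
        sizes.append(image.size)
    return sizes


print(generate("a bowl of soup that is a portal to another dimension"))  # [64, 256, 1024]
```

Splitting generation this way means the prior only has to produce a CLIP image embedding, and the decoder only has to turn that embedding into pixels.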

DALL·E 2 is now part of the OpenAI API, so developers can integrate the technology into their own projects. It joins other OpenAI models on the API platform, including GPT-3, Embeddings, and Codex. OpenAI's images API, powered by DALL·E 2, provides three methods for interacting with images:

- Generating images from a text prompt

- Editing an existing image based on a new text prompt

- Creating variations of an existing image


The image generations endpoint allows users to create an original image from a text prompt. Images can be 256x256, 512x512, or 1024x1024 pixels, and smaller sizes are faster to generate. Users can request 1 to 10 images at a time; the response_format parameter selects whether they are returned as URLs (which expire after an hour) or as Base64 data.
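A request to the generations endpoint can be assembled with the Python standard library alone. This sketch only validates parameters and builds the request; it does not send it. The URL `https://api.openai.com/v1/images/generations`, the JSON fields `prompt`, `n`, `size`, `response_format`, and the format values `url` and `b64_json` reflect OpenAI's public API reference at the time of writing and may have changed since; the helper name and the placeholder key are assumptions.

```python
import json
import urllib.request

GENERATIONS_URL = "https://api.openai.com/v1/images/generations"
ALLOWED_SIZES = {"256x256", "512x512", "1024x1024"}
ALLOWED_FORMATS = {"url", "b64_json"}  # URLs expire after an hour


def build_generation_request(
    api_key: str,
    prompt: str,
    n: int = 1,
    size: str = "1024x1024",
    response_format: str = "url",
) -> urllib.request.Request:
    """Validate parameters and build (but do not send) an image generation request."""
    if not 1 <= n <= 10:
        raise ValueError("n must be between 1 and 10")
    if size not in ALLOWED_SIZES:
        raise ValueError(f"size must be one of {sorted(ALLOWED_SIZES)}")
    if response_format not in ALLOWED_FORMATS:
        raise ValueError(f"response_format must be one of {sorted(ALLOWED_FORMATS)}")
    body = json.dumps(
        {"prompt": prompt, "n": n, "size": size, "response_format": response_format}
    ).encode("utf-8")
    return urllib.request.Request(
        GENERATIONS_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


req = build_generation_request("YOUR_API_KEY", "an astronaut riding a horse", n=2, size="512x512")
print(req.get_method(), req.full_url)
# POST https://api.openai.com/v1/images/generations
```

Passing `req` to `urllib.request.urlopen` would actually send it; validating locally first avoids spending a round trip on a request the server would reject.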

The image edits endpoint allows users to edit or extend an image by uploading a mask. Transparent areas of the mask indicate where the image should be edited, and the prompt should describe the full new image, not only the erased area. The uploaded image and mask must both be square PNG images with the same dimensions and less than 4MB in size. Because the non-transparent areas of the mask are not used to generate the output, they do not need to match the original image.

Example of OpenAI's API image edits endpoint with the prompt.
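The input rules for the edits endpoint (square PNGs, matching dimensions, under 4MB) can be checked locally before uploading. This standard-library sketch reads width and height from each file's PNG IHDR header. Two assumptions are worth flagging: "less than 4MB" is read as under 4 x 1024 x 1024 bytes, and a mask counts as able to carry transparency only if its PNG color type includes an alpha channel (PNGs can also mark transparency with a tRNS chunk, which this sketch ignores). All function names are hypothetical.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MAX_BYTES = 4 * 1024 * 1024  # assumption: "less than 4MB" read as < 4 MiB
ALPHA_COLOR_TYPES = {4, 6}   # PNG color types with an alpha channel


def png_header(data: bytes) -> tuple:
    """Return (width, height, color_type) read from the PNG IHDR chunk."""
    if len(data) < 26 or not data.startswith(PNG_SIGNATURE) or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    width, height = struct.unpack(">II", data[16:24])
    return width, height, data[25]


def validate_edit_inputs(image: bytes, mask: bytes) -> None:
    """Raise ValueError if the image/mask pair breaks the documented input rules."""
    headers = {}
    for name, data in (("image", image), ("mask", mask)):
        if len(data) >= MAX_BYTES:
            raise ValueError(f"{name} must be smaller than 4MB")
        width, height, color_type = png_header(data)
        if width != height:
            raise ValueError(f"{name} must be square, got {width}x{height}")
        headers[name] = (width, height, color_type)
    if headers["image"][:2] != headers["mask"][:2]:
        raise ValueError("image and mask must have the same dimensions")
    if headers["mask"][2] not in ALPHA_COLOR_TYPES:
        raise ValueError("mask has no alpha channel to mark transparent areas")


def header_only_png(width: int, height: int, color_type: int = 6) -> bytes:
    """Test helper: signature plus a valid IHDR chunk (not a complete, viewable PNG)."""
    ihdr = struct.pack(">II5B", width, height, 8, color_type, 0, 0, 0)
    crc = struct.pack(">I", zlib.crc32(b"IHDR" + ihdr) & 0xFFFFFFFF)
    return PNG_SIGNATURE + struct.pack(">I", len(ihdr)) + b"IHDR" + ihdr + crc


validate_edit_inputs(header_only_png(512, 512), header_only_png(512, 512))  # passes
try:
    validate_edit_inputs(header_only_png(512, 512), header_only_png(256, 256))
except ValueError as err:
    print(err)  # image and mask must have the same dimensions
```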

The image variations endpoint allows users to generate variations of a given image. The input image must be a square PNG image less than 4MB in size.

Example of OpenAI's API image variations endpoint.
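Because the variations endpoint takes an uploaded file rather than a JSON body, the request is sent as multipart/form-data. A standard-library sketch that builds (but does not send) such a request; the URL `https://api.openai.com/v1/images/variations` and the form fields `image`, `n` and `size` follow OpenAI's public API reference at the time of writing, while the helper name, the random boundary, the placeholder key and the placeholder image bytes are assumptions.

```python
import urllib.request
import uuid

VARIATIONS_URL = "https://api.openai.com/v1/images/variations"
ALLOWED_SIZES = {"256x256", "512x512", "1024x1024"}


def build_variation_request(
    api_key: str, png_bytes: bytes, n: int = 1, size: str = "1024x1024"
) -> urllib.request.Request:
    """Build (but do not send) a multipart/form-data request for image variations."""
    if len(png_bytes) >= 4 * 1024 * 1024:
        raise ValueError("image must be smaller than 4MB")
    if not 1 <= n <= 10:
        raise ValueError("n must be between 1 and 10")
    if size not in ALLOWED_SIZES:
        raise ValueError(f"size must be one of {sorted(ALLOWED_SIZES)}")

    boundary = uuid.uuid4().hex  # random separator between form parts
    parts = []
    for name, value in (("n", str(n)), ("size", size)):
        parts.append(
            (
                f"--{boundary}\r\n"
                f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
                f"{value}\r\n"
            ).encode("utf-8")
        )
    # The file part carries the raw PNG bytes after its own headers.
    parts.append(
        (
            f"--{boundary}\r\n"
            'Content-Disposition: form-data; name="image"; filename="image.png"\r\n'
            "Content-Type: image/png\r\n\r\n"
        ).encode("utf-8")
        + png_bytes
        + b"\r\n"
    )
    parts.append(f"--{boundary}--\r\n".encode("utf-8"))

    return urllib.request.Request(
        VARIATIONS_URL,
        data=b"".join(parts),
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )


# In practice the bytes would come from open("input.png", "rb").read().
placeholder_png = b"\x89PNG\r\n\x1a\n" + b"placeholder"
req = build_variation_request("YOUR_API_KEY", placeholder_png, n=3, size="512x512")
print(req.get_method(), req.full_url)
```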

April 13, 2022

The paper describes the two-stage model DALL·E 2 uses for image generation.


The name, DALL·E, is a portmanteau of Dali (from the artist Salvador Dali) and Pixar's WALL-E. OpenAI introduced DALL·E in January 2021. DALL·E 2 was launched on April 6, 2022, offering significant improvements over the original. The original DALL·E took significant time to return images and had issues producing grainy images. DALL·E 2 generates images with greater realism and accuracy and with 4x greater resolution. Evaluators were asked to compare 1,000 image generations from both DALL·E 1 and DALL·E 2: 71.7% preferred DALL·E 2 for caption matching, and 88.8% preferred it for photorealism.

DALL·E 2 remains in beta. Access was initially limited, leaving many people on a long waiting list. On September 28, 2022, OpenAI announced the removal of the waiting list for the DALL·E 2 beta, allowing users to sign up and start using the tool immediately. At the time, the model had more than 1.5 million users generating over 2 million images a day.


In January 2021, OpenAI introduced DALL·E, a neural network that can create images from text captions. DALL·E is a 12-billion-parameter version of GPT-3, trained to generate images from text descriptions using a dataset of text-image pairs. Like GPT-3, DALL·E is a transformer language model: it receives both the text and the image as a single stream of data containing up to 1280 tokens, and it is trained using maximum likelihood to generate all of the tokens, one after another.
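The single-stream setup and its training objective can be illustrated with token counts. The original DALL·E paper splits the 1280 tokens into up to 256 text tokens followed by 1024 image tokens (a 32x32 grid of codes from a discrete VAE); this sketch assumes that split, uses made-up token ids and a hypothetical padding id, and substitutes a uniform toy model for the transformer so that maximum likelihood appears as minimizing the summed negative log-probability of each token given the ones before it.

```python
import math
from typing import Callable, List, Sequence

MAX_TEXT_TOKENS = 256
IMAGE_GRID = 32                          # 32 x 32 grid of image codes
IMAGE_TOKENS = IMAGE_GRID * IMAGE_GRID   # 1024
PAD = 0                                  # hypothetical padding id


def build_stream(text_tokens: Sequence[int], image_tokens: Sequence[int]) -> List[int]:
    """Concatenate padded text tokens and image tokens into one 1280-token sequence."""
    if len(text_tokens) > MAX_TEXT_TOKENS:
        raise ValueError("too many text tokens")
    if len(image_tokens) != IMAGE_TOKENS:
        raise ValueError(f"expected {IMAGE_TOKENS} image tokens")
    padded = list(text_tokens) + [PAD] * (MAX_TEXT_TOKENS - len(text_tokens))
    return padded + list(image_tokens)


def sequence_nll(stream: Sequence[int], prob: Callable[[Sequence[int], int], float]) -> float:
    """Maximum-likelihood loss: -sum of log p(token_i | tokens before i)."""
    return -sum(math.log(prob(stream[:i], tok)) for i, tok in enumerate(stream))


def uniform_model(prefix: Sequence[int], token: int) -> float:
    """Toy stand-in for the transformer: every token is equally likely."""
    return 1.0 / 8192


stream = build_stream([17, 42, 99], [5] * IMAGE_TOKENS)
print(len(stream))  # 1280
print(sequence_nll(stream, uniform_model))
```

A trained transformer would assign higher probability to the tokens that actually follow, lowering this loss; generation then samples image tokens one at a time after the text prefix.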

DALL·E 2, the second iteration of the tool, was launched on April 6, 2022, with access limited to trusted users so that OpenAI could learn how the technology is used and understand its capabilities and limitations. The phased deployment of DALL·E 2 is intended to give OpenAI time to develop safeguards that prevent harmful image generation and curb misuse of the tool. During DALL·E 2's initial rollout, OpenAI asked users not to share photorealistic generations that include faces and to flag any problematic generations, to limit potential harm.

On May 18, 2022, OpenAI published a research preview update on the early use of the DALL·E 2 beta. The update found:

- Users had collectively created over 3 million images

- Less than 0.05% of downloaded or publicly shared images were flagged as potentially violating OpenAI's content policy

- Upon human review, roughly 30% of flagged images were confirmed to violate the policy, leading to an account deactivation

Users can sign up to use DALL·E 2 on the OpenAI website. The model runs in the browser, and images are created by typing a descriptive prompt of up to 400 characters. OpenAI continues to develop safeguards to prevent harmful image generation and curb misuse, which has led to a phased deployment that expands access as OpenAI learns more about how the model is used. Originally free to use (until July 2022), DALL·E 2 now offers users a limited number of monthly credits with the option to pay for more.

After the May 2022 update, OpenAI announced that it intended to onboard an additional 1,000 users per week from the waiting list while it iterated on its safety system.

On July 20, 2022, OpenAI announced that DALL·E 2 was available in beta, with plans to invite one million users from the waiting list over the following weeks. Every user receives 50 free credits during their first month and 15 free credits each subsequent month. Each credit covers either one original DALL·E 2 prompt generation (returning four images) or one edit or variation prompt (returning three images). During the first phase of the beta, users could buy additional credits in increments of 115 credits for $15. From the release of the beta, users received full usage rights to commercialize the images they create with DALL·E 2, including reprinting, selling, and merchandising. These rights also apply to images generated earlier during the research preview.
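The credit terms above translate into a simple per-image cost. A back-of-the-envelope sketch using only the figures in the paragraph (115 credits for $15, four images per generation credit, three per edit or variation credit, 50 free credits in the first month and 15 in each later month); `Fraction` keeps the arithmetic exact, the function names are illustrative, and the free-credit total ignores whether unused credits carry over.

```python
from fractions import Fraction

PACK_PRICE_USD = Fraction(15)
PACK_CREDITS = 115
IMAGES_PER_CREDIT = {"generation": 4, "edit_or_variation": 3}


def cost_per_image(kind: str) -> Fraction:
    """Dollar cost of one image when credits are bought in 115-credit packs."""
    return PACK_PRICE_USD / PACK_CREDITS / IMAGES_PER_CREDIT[kind]


def free_credits(months: int) -> int:
    """Total free credits granted over the first `months` months
    (50 in month one, 15 in each later month)."""
    if months <= 0:
        return 0
    return 50 + 15 * (months - 1)


print(f"${float(cost_per_image('generation')):.4f} per generated image")        # $0.0326
print(f"${float(cost_per_image('edit_or_variation')):.4f} per edit/variation image")  # $0.0435
print(free_credits(12))  # 215
```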

On August 31, 2022, OpenAI announced the new outpainting feature which allows users to extend existing images beyond the current borders, adding new visual elements in the same style or a new direction using a text description.

On September 28, 2022, OpenAI announced the removal of the waiting list for the DALL·E 2 beta, allowing users to sign up and start using the tool immediately. At the time of the announcement, the model had more than 1.5 million users generating over 2 million images a day.

On November 3, 2022, OpenAI released the DALL·E API public beta, allowing developers to integrate the tool into their apps and products.

November 3, 2022


September 28, 2022

Since its release, DALL·E 2 has been used by more than 1.5 million users to generate over 2 million images a day.

August 31, 2022

The feature allows users to extend existing images beyond the current borders, adding new visual elements in the same style or a new direction using a text description.

July 20, 2022

OpenAI also announced that users would receive free credits each month, with a fee to generate additional images.

May 18, 2022

The update states that 3 million images had been created and that less than 0.05% of downloaded or publicly shared images were flagged as potentially violating OpenAI's content policy. OpenAI plans to continue developing its content policy while onboarding up to 1,000 users from the waiting list every week.

April 6, 2022

The model is open to a limited number of trusted users.



January 5, 2021
